The world is becoming increasingly concerned with AI. From the UK’s recent summit to dystopian pop-culture films (think the new ‘Mission: Impossible’ film), the concern is clear. Despite this, AI currently remains largely unregulated. However, MEP Dragoș Tudorache now suggests that regulation is on its way.

The Guardian interviewed Tudorache on the subject. He spoke from his office at the heart of the EU, in Brussels. Claiming “we are in touching distance”, Tudorache announced that “a good 60-70% of text is already agreed” in relation to the proposed legislation. He went on to describe the ongoing threat that AI poses and the need for regulation: “You could grow your own little monster in your kitchen.”

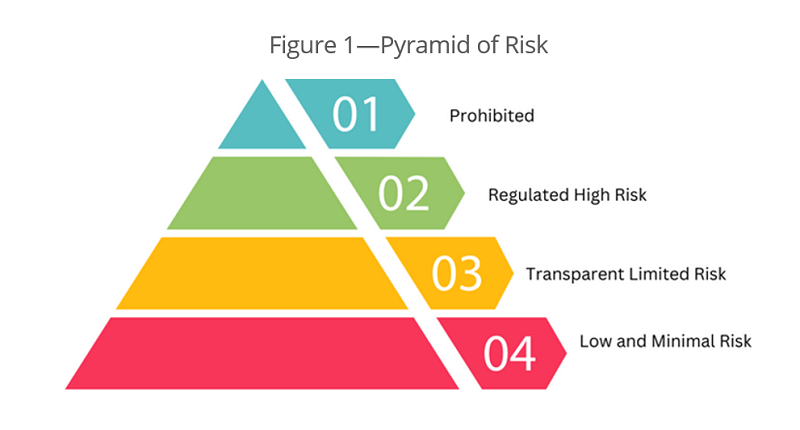

But what exactly are these “little monsters”? The proposed legislation would classify AI systems into four different levels of risk, ranging from “unacceptable” to “minimal or low risk”. From there, each level would be regulated accordingly.

One example of a prohibited threat may be biometric identification systems, such as fingerprint or facial recognition. This is something 81% of phones use, according to tech company Cisco. At the other end of the scale, the European Commission claims the vast majority of the EU’s AI systems would be classed as low or minimal risk. Video game AI, for example, would remain at the lowest level and so would not face any extra regulation.

Nonetheless, as the EU races ahead in creating legislation, questions arise about its impact both within the EU and elsewhere. One point Tudorache raised was energy: “I want transparency on energy.” Though the eight largest consumers of energy are currently non-EU countries, Tudorache continues, “Energy is an open market so the AI Act won’t stop energy use, but if there is an onus on companies publishing data on energy use, that way you can build awareness and shape public policy.” And with the recent international declaration recognising AI’s capacity to cause disaster, there appears to be movement against AI risk on a global scale. On a national scale, too, the hegemonic power of the US recently saw its President sign an executive order on AI.

As for the UK: despite the recent AI summit, the UK has yet to introduce any regulation. Nor is there any intention to introduce any, according to a white paper published earlier this year. This is contrary to the EU’s approach, which the UK would be following if it were still a member. Speaking in favour of the UK’s approach, Regius Professor Dame Wendy Hall of the University of Southampton argued that “it’s far too early in the AI development cycle for us to know definitively how to regulate it.”

The future of AI comes with fear of the unknown, regulation, and a lot of controversy. By the end of this year, the European Parliament aims to reach an agreement on its regulation. Should it come into effect, one thing is certain: it would change the way AI is dealt with within the EU, if not globally.